- The IT department IT i forskning, formidling og utdanning (FFU), in English the Division for Research, Dissemination and Education (RDE), is responsible for delivering IT support for research at the University of Oslo.

- The division's departments operate research infrastructure and support researchers with computational resources, data storage, application portals, code parallelization and optimization, and advanced user support.

- This newsletter is announced on the hpc-users mailing list. To join the list, send an email to sympa@usit.uio.no with the subject "subscribe hpc-users Mr Fox" (if your name is Mr Fox). The newsletter is issued at least twice a year.
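
As an illustration, on a machine with a configured `mail` (mailx) command, the subscription request could be sent from the shell as below. This is only a sketch: the function name `subscribe_hpc_users` is hypothetical, and sending the same subject line from your normal email client works just as well.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not an official USIT tool): send a Sympa subscription
# request for the hpc-users list. Assumes a working `mail` (mailx) setup.
# $1: your full name as it should appear on the list, e.g. "Mr Fox".
subscribe_hpc_users() {
    # The Sympa command goes in the subject line; the body is left empty
    mail -s "subscribe hpc-users $1" sympa@usit.uio.no < /dev/null
}

# Usage: subscribe_hpc_users "Mr Fox"
```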

Summer is over and we're getting ready for the last stretch of 2024. We hope you enjoyed the summer and that your batteries are fully charged for the fall semester. If you want to top them up even more with some HPC news, read on!

Colossus upgraded

The operating system on Colossus has been upgraded to Rocky Linux 9. In addition, the compute nodes have been moved and the network has been reconfigured, which will make it easier to develop services that use HPC as a backend in the future.

The module software has been recompiled for Rocky Linux 9. All projects have been given a new Linux VM running RHEL 9 for submitting jobs; these submit hosts have been renamed from pXX-submit to pXX-hpc-01. Any software that you have built yourself will most likely need to be recompiled.
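
Self-built programs typically break after an OS upgrade because shared libraries they were linked against have changed or disappeared. A rough way to find them is to run `ldd` on each file and look for dependencies reported as "not found". The sketch below takes the directory holding your builds as an argument (for example a hypothetical `$HOME/software`); the helper name is made up, and it does not detect symbol-version mismatches. Only run it on your own builds, since `ldd` may in some cases execute the program it inspects.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print executables and shared libraries under a
# directory that have unresolved shared-library dependencies.
# Only missing libraries are reported; ldd errors are discarded.
find_broken_binaries() {
    find "$1" -type f \( -perm -u+x -o -name '*.so*' \) -exec sh -c '
        for f in "$@"; do
            # ldd prints "libfoo.so.1 => not found" for each missing library
            if ldd "$f" 2>/dev/null | grep -q "not found"; then
                printf "%s\n" "$f"
            fi
        done' sh {} +
}

# Usage: find_broken_binaries "$HOME/software"
```

Anything it lists is a candidate for recompilation against the new modules.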

Apptainer (formerly known as Singularity) is no longer a module, but is installed on the compute nodes directly (like on the submit hosts). Please remove the "module load apptainer" or "module load singularity" command from any job scripts that use it.
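
If you have many job scripts, the module lines can also be removed with `sed`. The sketch below is a hypothetical convenience function, not a provided tool. It uses GNU sed, keeps a `.bak` copy of each script, and only deletes lines that load nothing but the apptainer or singularity module, optionally with a version suffix (the `singularity/3.8` in the comment is just an illustrative version string). Review the result before submitting.

```shell
#!/usr/bin/env bash
# Hypothetical helper: delete lines such as "module load apptainer" or
# "module load singularity/3.8" from the given job scripts.
# A backup <script>.bak is written next to each file. Lines that load
# other modules as well are left untouched for manual editing.
strip_container_module() {
    local script
    for script in "$@"; do
        sed -i.bak -E \
            '/^[[:space:]]*module[[:space:]]+load[[:space:]]+(apptainer|singularity)(\/[^[:space:]]*)?[[:space:]]*$/d' \
            "$script"
    done
}

# Usage (hypothetical file names): strip_container_module job.sh array_job.sh
```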

Slurm User Group 2024 hosted at UiO

UiO will be hosting this year's annual Slurm User Group meeting (SLUG24) on September 11–13, with participants from several of the biggest HPC clusters in the world, and possibly from some of the smallest as well. If you are interested in attending, see https://slug24.splashthat.com for information and registration.

New NRIS supercomputer ordered

The new NRIS supercomputer (project name A2) will be delivered by HPE, to be installed during the spring and summer of 2025.

This will be the first HPC system in the NRIS ecosystem that can handle large accelerator-driven jobs, and it aims to be a future-proof tool for the various requirements of modern data-driven research.

Specs:

HPE Cray Supercomputing EX system equipped with 252 nodes, each with two AMD EPYC Turin CPUs of 128 cores each, for a total of 64,512 CPU cores. In addition, the system will be delivered with 76 GPU nodes, each with four NVIDIA Grace Hopper Superchips (NVIDIA GH200 96 GB), for a total of 304 GPUs. The nodes will be connected by the HPE Slingshot Interconnect, while an HPE Cray ClusterStor E1000 storage system provides 5.3 petabytes (PB) of storage.
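
The totals follow directly from the per-node figures above:

$$
\begin{aligned}
\text{CPU cores} &= 252\ \text{nodes} \times 2\ \tfrac{\text{CPUs}}{\text{node}} \times 128\ \tfrac{\text{cores}}{\text{CPU}} = 64\,512 \\
\text{GPUs} &= 76\ \text{nodes} \times 4\ \tfrac{\text{GH200}}{\text{node}} = 304
\end{aligned}
$$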

All the compute nodes are placed in two HPE Cray Supercomputing EX4000 cabinets, both of which are fully filled and will weigh approximately four tons each. The compute nodes are directly liquid-cooled for the best possible heat transfer. This also provides the best possible potential for heat recovery. The contract also includes the possibility of expanding the system in the future, with 119,808 CPU cores and/or 224 GPUs.

Sigma2 cost for UiO users

As mentioned in the previous newsletter, during the renegotiation of the Sigma2-BOTT-RCN agreement UiO managed to lower its cost from 2023 onwards to approximately 24 MNOK instead of the planned 37.5 MNOK. In short, UiO, like all other users, will pay the actual operational costs, but we will pay up front as a guarantee rather than per use.

It is of utmost importance that all researchers, and especially those at UiO, are aware of the actual operational cost connected to their work, and that you try to apply for external funding to cover your part of it. Whether, and how much, you will have to pay for your operational costs directly from your project depends on your faculty and how it chooses to handle the invoice.

Centrally, the UiO administration will cover a significant part of the invoice. This functions as a “centrally covered discount” before the remainder of the invoice is sent to the faculties and museums based on their usage.

Also note that when applying for EU projects that involve Sigma2 resources, it is very important for planning purposes to contact Sigma2 before submitting the application.

New Sigma2 e-Infrastructure allocation period 2024.2, application deadline 29 August 2024

The Sigma2 e-Infrastructure allocation period 2024.2 (01.10.2024 - 30.04.2025) is approaching, and the deadline for applications for HPC CPU hours and storage (for both regular and sensitive data) is 29 August. This also includes access to the Sigma2 part of TSD, as well as LUMI-C and LUMI-G.

Please note that although applications for allocations can span multiple allocation periods, they must be verified by the applicants before each application deadline in order to be processed by the Resource Allocation Committee for the subsequent period. Hence any existing multi-period application must be verified before the deadline to be evaluated and receive an allocation before the new period starts. This does not apply to LARGE projects.

Kind reminder: If you have many CPU hours remaining in the current period, you should of course try to use them as soon as possible, but since many users will be doing the same, there is likely to be a resource squeeze and potentially long queue times. Quotas are allocated according to several criteria, an important one being publications registered in Cristin (in addition to historical usage). The quotas are based on even use throughout the allocation period. If you think you will be unable to spend all your allocated CPU hours, please notify sigma@uninett.no so that the hours can be released for someone else; you may get extra hours later if you need more. If you have already run out of hours, or are about to, take a look at the Sigma2 extra allocation page to see how to ask for more. No guarantees, of course.
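
Since quotas assume even use over the period, one simple way to see where you stand is to compare your consumption (as reported by `cost`) with a linear share of the allocation. The sketch below does that with integer arithmetic; the function name and the example allocation of 212,000 CPU hours are hypothetical, and it assumes GNU `date`. Period 2024.2 runs from 01.10.2024 to 30.04.2025 inclusive, so the end is passed as the exclusive date 2025-05-01.

```shell
#!/usr/bin/env bash
# Hypothetical helper: CPU hours you would have used by a given date if the
# allocation were spent evenly over the period.
# Arguments: allocation start end(exclusive) date, dates as YYYY-MM-DD.
even_use_target() {
    local alloc=$1 start=$2 end=$3 today=$4
    local s e t
    # Seconds since the epoch, in UTC to avoid daylight-saving shifts
    s=$(date -u -d "$start" +%s)
    e=$(date -u -d "$end" +%s)
    t=$(date -u -d "$today" +%s)
    # Elapsed fraction of the period, scaled to the allocation (rounded down)
    echo $(( alloc * (t - s) / (e - s) ))
}
```

For example, `even_use_target 212000 2024-10-01 2025-05-01 2025-01-01` prints 92000, since 92 of the period's 212 days have passed by 1 January.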

Run `projects` to list the project accounts you are able to use.

Run `cost -p nn0815k` to check your allocation (replace nn0815k with your project's account name).

Run `cost -p nn0815k --detail` to check your allocation and print the consumption of all users of that allocation.
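
To check every allocation you have access to in one go, the two commands can be combined in a small loop. This is a sketch that assumes `projects` prints one account name per line (check its output on your system first); the function name is hypothetical, and any extra arguments such as `--detail` are passed on to `cost`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the allocation of every project account you can use.
# Assumes `projects` lists one account name (e.g. nn0815k) per line.
# Extra arguments, such as --detail, are forwarded to `cost`.
show_all_allocations() {
    projects | while IFS= read -r account; do
        # Skip blank lines in the listing
        [ -n "$account" ] || continue
        printf '== %s ==\n' "$account"
        # Keep cost from reading the rest of the account list on stdin
        cost -p "$account" "$@" < /dev/null
    done
}

# Usage: show_all_allocations            # one summary per account
#        show_all_allocations --detail   # per-user consumption as well
```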

HPC Course week/training


The Norwegian Research Infrastructure Services (NRIS) has an extensive education and training program to assist existing and future users of our services. UiO has joined NRIS in providing training to all Norwegian HPC users instead of focusing only on UiO users. Consolidating the training events this way makes it possible to offer more streamlined and consistent training. The courses aim to give participants an understanding of our services and of how to use the resources effectively.

There is an HPC onboarding course coming up on October 14–17, in addition to a one-day event, Introduction to NIRD Toolkit, on October 24.

For a list of all events, see: https://documentation.sigma2.no/training/events.html

Training video archive: https://documentation.sigma2.no/training/videos.html

Please do not hesitate to request new topics, or areas not yet covered by our training, that would help you use the services more effectively or make your work with our systems easier.

Fox Supercomputer - get access

The Fox cluster is the 'general use' HPC system within Educloud, open to researchers and students at UiO and their external collaborators. It has 24 regular compute nodes with a total of 3,000 cores, and five GPU-accelerated nodes with NVIDIA RTX 3090 and NVIDIA A100 cards. Access to Fox requires an Educloud user account; see the registration instructions. About 250 projects have already joined Educloud!

For instructions and guidelines on how to use Fox, see Foxdocs - the Fox User Manual.

Software request form

If you need additional software or want us to upgrade an existing software package, we are happy to do this for you (or help you install it yourself if you prefer). To get all the relevant information and handle the installation as quickly as possible, we have created a software request form. When you submit the form, a ticket is created in RT and we will get back to you with the installation progress.

To request software, go to the software request form.

Support request form

Since we introduced the software request form, we have had a great experience with users requesting software installations: we now usually get all the information we need at first contact. To further improve our support and get to the root cause of issues faster, we now encourage you to fill in a form when you need help with other types of issues as well. A submitted support form is sent to our hpc-drift queue in RT and handled as usual. The difference from emailing us directly is that we immediately get the information we need, and your ticket is labelled according to the system you are on and the issue you are facing.

The link to the new support form is shown when you log in to our servers, and it has also been added to the relevant documentation pages. You can have a look at it here:

https://nettskjema.no/a/hpc-support

We encourage users of all our HPC resources to use this form, whether it concerns Fox, LightHPC, ML-nodes, Educloud OnDemand, Galaxy-Fox or our individual appnodes.

Other hardware needs

If you are in need of particular types of hardware (fancy accelerators, GPUs, ARM, Kunluns, Dragens, Graphcore, etc.) not provided through our local infrastructure, please contact us (hpc-drift@usit.uio.no), and we'll try to help you as best we can.

Also, if you have a computational challenge where your laptop is too small but a full-blown HPC solution would be overkill, it might be worth checking out NREC. This service can provide you with your own dedicated server, with a range of operating systems to choose from.

With the ongoing upheaval in computing architectures, we are also looking into RISC-V. The European Processor Initiative is targeting ARM and RISC-V, and UiO needs to stay on top of developments.

With the advent of integrated accelerators (formerly known as GPUs), where cache-coherent memory is shared among all execution units including the accelerators (such as AMD MI300 and NVIDIA Grace Hopper), these systems might be of interest to early adopters. Get in touch if this sounds interesting.

Publication tracker

The Division for Research, Dissemination and Education (RDE) is interested in keeping track of publications that involve computation on RDE services. We greatly appreciate an email to:

hpc-publications@usit.uio.no

about any publications (including in the general media). If you would like to cite your use of our services, please follow these guidelines.

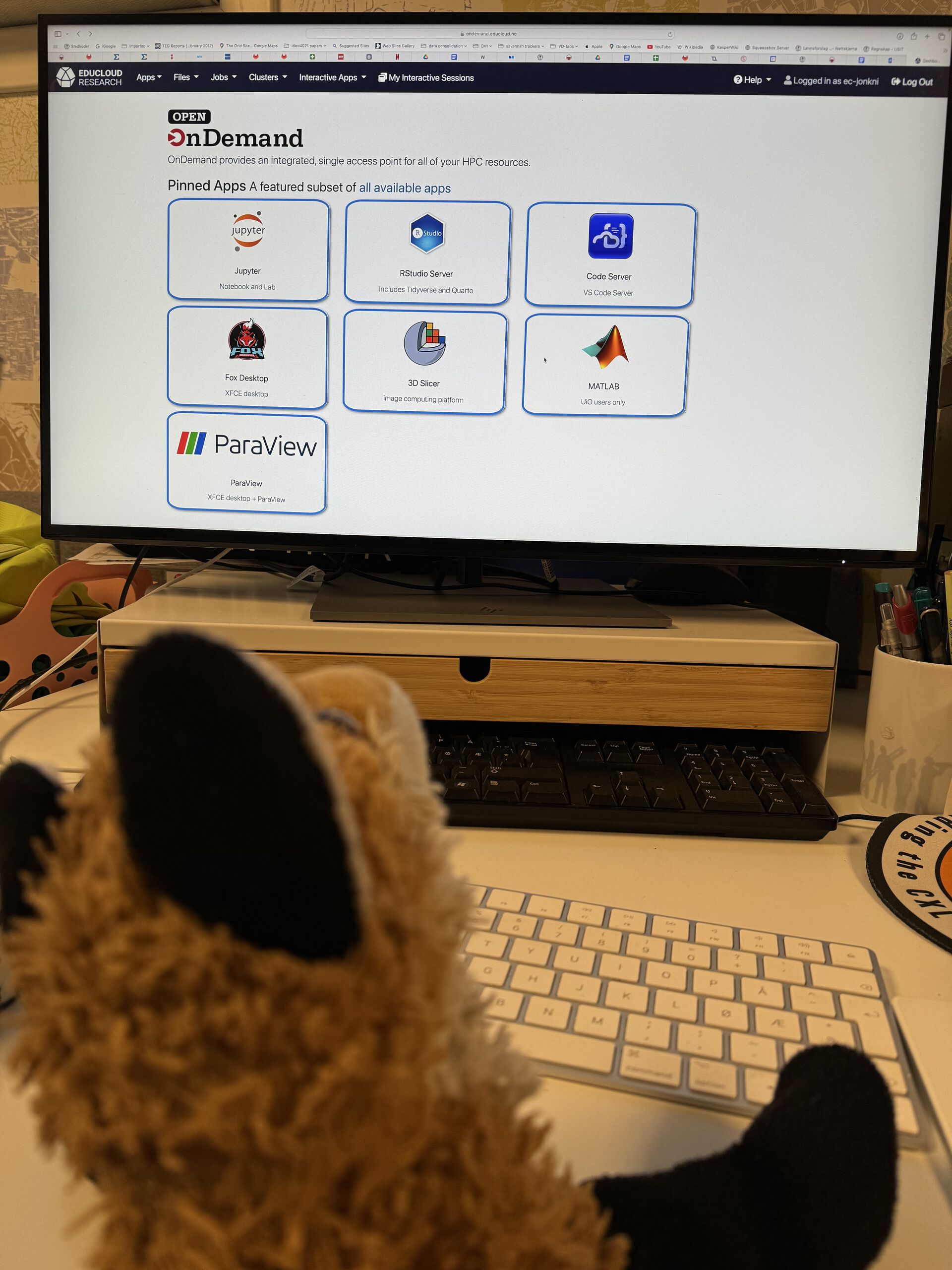

Fox trying out Educloud OnDemand
